Published on the 22/03/2019 | Written by Pat Pilcher and Hayden McCall

Where there is a will, there is always a way…

Social media platforms have been under fire in the wake of the Christchurch mosque shootings and challenged to focus their technical capabilities on doing good.

A year ago at Microsoft’s Seattle campus, the tech giant was showcasing video content analysis, demonstrating the technology which, using AI, could tell me my age, gender, mood and what I was doing. That this technology could analyse humans in real time pointed to some compelling applications in business.

Political and business leaders are now asking social media executives directly and forcefully why such AI and other algorithmic smarts were unable to identify and interrupt the awful live video stream, and then remove it so that it was unable to proliferate online as it did.

Their response has been that alt-right activists exploiting the reach of social media to push an agenda of hate out to a broader audience opens up a can of worms for social media platforms, resulting in an unwinnable game of whack-a-mole. As soon as one copy of an offensive post gets removed, more copies are shared and keep popping up across social media platforms.

This event was perhaps exceptional, with new re-cut versions of the video being published every second, but it highlights the shortcomings of current techniques. The platforms couldn’t keep up because, ultimately, their video content moderation tools rely on decade-old technology and under-resourced manual intervention.

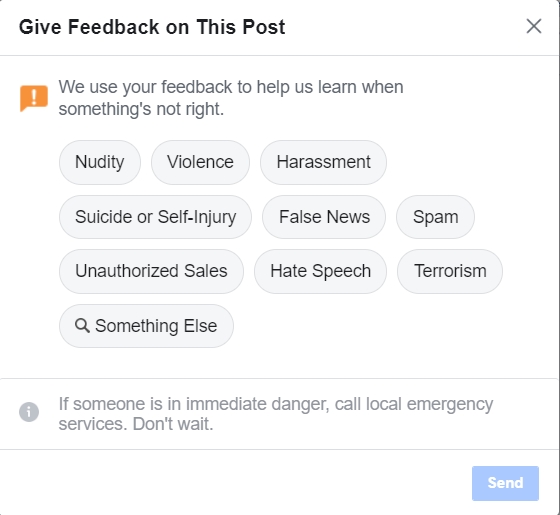

That technology is PhotoDNA, a Microsoft solution developed with Professor Hany Farid of Dartmouth College way back in 2009. The process relies on Facebook, YouTube, Twitter and their fellow members of the Global Internet Forum to Counter Terrorism (GIFCT) being alerted to objectionable content via user feedback, and attaching a digital fingerprint, or ‘hash’, to the content. Subsequent posts matching the hash are blocked or moderated according to each platform’s own policies, such as Facebook’s community standards.
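To make the hash-and-block idea concrete, here is a minimal sketch in Python. It is not PhotoDNA, whose algorithm is not described in this article; it uses a simple "average hash" as a stand-in, and the 4x4 frame, the `max_distance` threshold and every function name are hypothetical choices made for illustration only.

```python
# Illustrative only: a toy "average hash", NOT the PhotoDNA algorithm.

def average_hash(pixels):
    """Return a bit string: 1 where a pixel is brighter than the frame's mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if value > mean else "0" for value in flat)


def hamming_distance(hash_a, hash_b):
    """Count the positions at which two equal-length hashes differ."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


def is_blocked(pixels, blocked_hashes, max_distance=2):
    """Flag a frame whose hash is within max_distance bits of a known hash."""
    candidate = average_hash(pixels)
    return any(hamming_distance(candidate, known) <= max_distance
               for known in blocked_hashes)


# Hypothetical 4x4 greyscale frame (values 0-255) that a user has reported.
reported = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 202, 212],
    [11, 21, 201, 211],
]
blocked_hashes = {average_hash(reported)}

# A re-encoded copy: every pixel is slightly brighter.
recompressed = [[min(255, value + 5) for value in row] for row in reported]

# A mirrored copy: one hypothetical example of a deliberate alteration.
mirrored = [list(reversed(row)) for row in reported]

print(is_blocked(recompressed, blocked_hashes))  # True: hash is unchanged
print(is_blocked(mirrored, blocked_hashes))      # False: every bit flips
```

In this toy scheme a light re-encode still matches, while a deliberate alteration such as mirroring slips through until someone reports the new version and its hash is added. That is the whack-a-mole dynamic in miniature.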

Even with 15,000 moderators globally, Facebook faced an impossible task when it came to removing the 1.5 million copies of the shooter’s live-streamed video.

So the question is whether, in the past decade, a better video content AI technology has been developed that the social media platforms should be using.

And the bigger question: if there isn’t, why have these companies not put their massive resources towards solving the problem?

Along with Microsoft, NEC is another company doing a lot of work in video content analysis (VCA). They describe it as “automatically analysing video to detect and determine temporal events not based on a single image… In other words, video analytics is the practice of using computers to automatically identify things of interest without an operator having to view the video.”

Sounds like that might be a good starting point, Mr Zuckerberg, and you might ask your mates at Microsoft for a hand.

Sure, the technical challenges underlying workable VCA for Facebook are daunting. On average, 100 million hours of video are watched daily on Facebook. Scanning that volume of video at 30 frames per second is a huge task, requiring considerable resources. Facebook (et al) need some incentive to justify deploying those resources, and with revenue an unlikely outcome (although Microsoft and NEC seem to think otherwise), compliance with a regulatory regime seems the only avenue.
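A quick back-of-the-envelope calculation shows the scale involved. The sketch below uses only the two figures quoted above; the one-frame-per-second sampling rate is a hypothetical assumption added for comparison, not something any platform is known to use.

```python
HOURS_WATCHED_PER_DAY = 100_000_000  # figure quoted in the article
FRAMES_PER_SECOND = 30               # frame rate quoted in the article
SECONDS_PER_HOUR = 3600
SAMPLED_FRAMES_PER_SECOND = 1        # hypothetical: analyse one frame a second

seconds_per_day = HOURS_WATCHED_PER_DAY * SECONDS_PER_HOUR
frames_per_day = seconds_per_day * FRAMES_PER_SECOND
sampled_frames_per_day = seconds_per_day * SAMPLED_FRAMES_PER_SECOND

print(f"{frames_per_day:,} frames per day at full rate")
print(f"{sampled_frames_per_day:,} frames per day when sampled")
```

That works out to 10.8 trillion frames a day at the full frame rate, or 360 billion if only one frame per second were analysed. Both are likely overestimates of the real workload, since the figure counts hours watched rather than hours uploaded, and a video viewed many times would only need analysing once.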

The next challenge then is getting VCA to discriminate between legitimate gun use, such as shooting at a rifle range or hunting, and a shooter on a rampage. Although VCA can recognise shapes such as guns or people, and attribute characteristics to them, navigating context is well beyond its current capabilities.

But here’s the thing. We have time. When is it ever imperative that a grotesque shooting event be streamed live? All the technology needs to do is automatically flag potentially objectionable video content, and interrupt streaming.

The other approach that researchers are working on is to embed every video uploaded with a unique, and invisible, digital fingerprint. While this wouldn’t help social media identify offensive content, it would make tracking and removing videos significantly quicker, even after copying or secondary filming.

Digital fingerprinting is already used in music. According to music specialists, BMAT: “An audio fingerprint is a condensed digital summary of an audio signal, generated by extracting acoustic relevant characteristics of a piece of audio content. Along with matching algorithms, this digital signature permits the identity of different versions of a single recording with the same title.”

Audio fingerprints are derived from the acoustic characteristics of the recording itself, so nothing is added that a listener could hear. A PC, tablet or phone can compute the fingerprint of a short snippet and match it against a database, which is how apps like Shazam can tell what tune is playing.

Facebook already uses this technology to block user-posted video clips containing copyrighted music, and it could similarly be applied to the audio track on video. Fingerprinting user-submitted content is an elegant solution, but is currently stymied by a lack of willing uptake and of agreement on standards between different social media platforms.

Voice conversion to text-based data presents an easier target for machine-based analysis, but it too has limitations. AI text analysis involves extracting information from voice and text and employing Natural Language Processing (NLP) so a machine can make sense of it. Large businesses, for example, use it to automate customer feedback analysis at scale and make data-driven decisions.

NLP has improved hugely over recent years. Amazon’s Alexa, the Google Assistant and Apple’s Siri can understand syntax and in some specific instances even grasp basic context.

But NLP and these other technologies, the platforms argue, are still not smart enough to pick up subtle cultural cues, which means they can’t tell the difference between hate speech and vigorous political debate. Last year the University of Padua found that padding posts with unrelated words, and even ‘leetspeak’ (where standard letters are replaced by numerals and characters that resemble letters), could trick Google’s AI into thinking that abusive messages were harmless.
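The leetspeak trick is easy to demonstrate on a far simpler system than the one the researchers tested. The sketch below is a plain keyword filter, not a machine-learning model and not Google’s AI; the blocklist term, the character map and the function names are all hypothetical.

```python
# Hypothetical character map: common leetspeak substitutions.
LEET_MAP = str.maketrans({
    "0": "o", "1": "i", "3": "e", "4": "a",
    "5": "s", "7": "t", "@": "a", "$": "s",
})

# Hypothetical placeholder standing in for a list of abusive terms.
BLOCKLIST = {"idiot"}


def naive_filter(text):
    """Flag text if any whitespace-separated word is on the blocklist."""
    return any(word in BLOCKLIST for word in text.lower().split())


def normalised_filter(text):
    """Undo leetspeak substitutions first, then apply the same check."""
    return naive_filter(text.translate(LEET_MAP))


message = "you are an 1d10t"
print(naive_filter(message))       # False: the disguised word slips through
print(normalised_filter(message))  # True: caught after normalisation
```

Normalising the text catches this particular disguise, but it also rewrites genuine numbers and symbols, and a determined poster only has to find a substitution that is not in the map.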

Further complicating things is the internationalisation of social media platforms. While AI could, in theory, look for word combinations and employ NLP to flag objectionable content for blocking or review by moderators, the sheer number of cultures and languages in which platforms such as Facebook operate means that AIs trained in English are going to be ineffectual at detecting hate speech in Russian or Arabic.

The hard question has been put to social media leadership: surely, surely, we’ve moved on in the past decade? Even so, it is true that technology can only do so much.

Each and every one of us also needs to take responsibility. Our own critical and contextual thinking about what is right or wrong with what we see online (and on the street) will always outdo any machine. We need not only to call others out on inappropriate comments and posts, but also to report them using the tools at our disposal.