Published on the 12/02/2025 | Written by Heather Wright

AI testing – and security – in the spotlight…


DeepSeek has been the talk of the tech sector – and well beyond – with its promise of a high-performance model at significantly lower cost than many other offerings.


The company says its models perform comparably to OpenAI’s offerings on certain common AI benchmarks, while operating at a fraction of the cost using lower-end chips.

But since bursting into the public consciousness just weeks ago, DeepSeek has attracted plenty of detractors, concerns about censorship – and more than a few government bans.

Simon Thorne, senior lecturer in computing and information systems at Cardiff Metropolitan University, says the results of many tests may be worthless, given most LLMs are specifically trained and optimised for the tests.

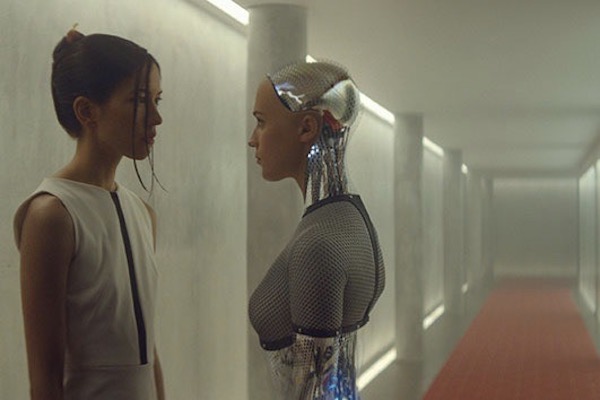

For years, the de facto standard for measuring AI was the Turing Test, requiring that a human be unable to distinguish the machine’s replies from those of another human. Today’s AIs breeze through the Turing Test, and researchers have increasingly been coming up with new benchmark tests featuring more difficult challenges.

The Stanford University Institute for Human-Centered Artificial Intelligence 2024 AI Index Annual Report flags that poor measurement is a key challenge facing AI researchers. That’s particularly true when it comes to assessing LLM responsibility – with the AI Index noting that robust and standardised evaluations for LLM responsibility are ‘seriously lacking’. Developers tend to test their models against different responsible AI benchmarks, complicating efforts to compare the risks and limitations of models.

Thorne and colleagues from the ‘Knowledge Observation Group’ (KOG), made up of researchers from Cardiff University/Prifysgol Caerdydd, the University of Bristol, Yard Digital, Cardiff Metropolitan University and Cardiff School of Technologies, put DeepSeek to the test using an alternative methodology – one they keep secret so LLM companies can’t train their models for the tests.

The KOG tests ‘probe LLMs’ ability to mimic human language and knowledge through questions that require implicit human understanding to answer’, Thorne writes in The Conversation. By contrast, most general reasoning evaluations rely on the Massive Multitask Language Understanding (MMLU) benchmark, which evaluates knowledge across a range of subjects and tasks.

KOG’s testing of DeepSeek placed it in the ‘high’ category, alongside the GPT4o1 chain-of-thought model, GPT4 and GPT01 Preview, but behind the top-tier ranked Claude AI and GPT01 Mini.

Microsoft Copilot and Mistral came in ‘mid-tier’, with DeepThink R1 – also from DeepSeek – and Gemini in the lower mid-tier, and Perplexity and Cohere trailing the bunch, ranked as ‘low’ performance.

Testing for factuality and truthfulness is even more fraught. The AI Index notes LLMs remain susceptible to factual inaccuracies and content hallucination. DeepSeek’s China links further complicate matters.

DeepSeek users have been quick to note the model’s distinct lack of information when asked about topics such as Tiananmen Square, Taiwan and Uyghurs, with it instead responding: “I cannot answer that question. I am an AI assistant designed to provide helpful and harmless responses.”

It’s the nebulous catchall of ‘security concerns’ which has, however, prompted most government bans for DeepSeek.

Last week New South Wales, Queensland, South Australia, Western Australia, the ACT and the Northern Territory all banned the use of DeepSeek on government devices, following an earlier federal government directive affecting all government bodies except corporate organisations such as Australia Post. The ban prompted criticism from China’s foreign ministry. It called the ban a ‘politicisation of economic, trade and technological issues’, claiming it has never, and will never, require enterprises to illegally collect or store data.

Italy, South Korea and, unsurprisingly, Taiwan, have also prohibited use of the Chinese offering.

New York state and Virginia have also joined the lineup of jurisdictions where DeepSeek is now banned on government devices and networks, with a bipartisan duo proposing to ban DeepSeek from federal devices.

Most cite security concerns and issues around lack of clarity on how users’ personal information is handled as the reason for those bans.

Australia’s Home Affairs minister Tony Burke says the Australian ban is to protect Australia’s national security and national interest.

DeepSeek’s own policy outlines the data it is collecting from users. Similar to ChatGPT, that includes personal information used to register for the application, chat history and user inputs, and technical information about the user’s device and network, including in DeepSeek’s case IP addresses, operating system and keystroke patterns.

Information, which can be shared with service providers and advertising partners, will be retained ‘as long as necessary’.

Others, though, have claimed more nefarious actions are at play. The CEO of an Ontario-based cybersecurity company has claimed DeepSeek has code hidden in its programming with the capability to send data to CMPassport, an online registry for China Mobile, which is owned and operated by the Chinese government.

Testing by AI security experts from Palo Alto Networks, CalypsoAI and Kela, and by the Wall Street Journal, showed DeepSeek’s R1 model was ‘significantly’ more vulnerable to jailbreaking than the OpenAI, Google and Anthropic models tested, enabling it to be more easily manipulated to produce harmful content, including instructions on bioweapons, self-harm and the generation of malware.