Published on 08/03/2018 | Written by Martin Norgrove, CTO at NOW Consulting

NOW Consulting’s CTO Martin Norgrove advises on how to approach big data projects…

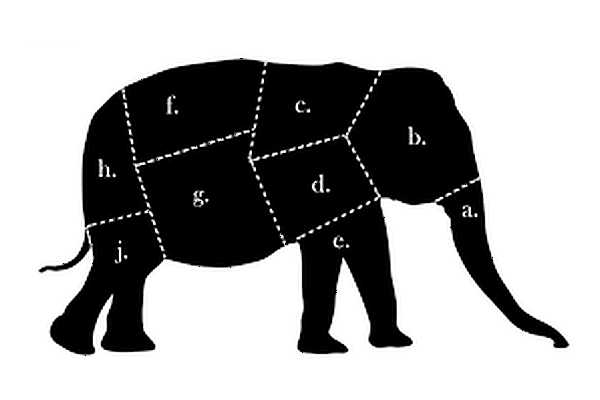

How do you eat an elephant? One small bite at a time. That sums up why an agile, iterative approach to data warehousing substantially improves the chances of success: it enables rapid delivery of early value. It equips development teams to understand business problems directly from the people experiencing them. And it provides the headroom to fail fast and learn from easily rectified mistakes.

Back in the gnarly old days, data warehousing projects earned themselves an unsavoury reputation. There are a few reasons for that, among them the simple novelty of an idea which needed time to mature. Another is the typical approach: a ‘big bang’, waterfall-style, all-in effort with a fixed outcome in mind but no real idea of how to get there. It was an attempt to boil the ocean. Or swallow the elephant whole.

These days, things have changed (well, some things have: some of our clients must still use the term ‘data warehouse’ sparingly for fear of opening old wounds). Data is widely recognised for its value. Data warehouses are accepted as an essential foundation for turning data into analytics and information. And the way data warehouses are built has changed. Let’s see how and why.

DevOps before it was DevOps

One of the driving forces behind the development of the data warehouse automation tool WhereScape RED was recognition of a simple principle: you don’t know what you don’t know. The founders realised that the only way to succeed with a data warehouse is to accept that it will be a voyage of discovery. And as on any voyage of discovery, there is no roadmap, much less Google Maps, showing the way.

When exploring, taking steps and making missteps is part of the process. If the delivery cycle is short, with rapid iterations and the establishment of a ‘minimum viable product’, you can pivot. As new characteristics are discovered in the data, the trajectory of the project can change. Getting to the eventual outcome isn’t a matter of following a predetermined, singular path (which, in those gnarly old days, might have been pursued with wishful thinking, blind ambition, blissful ignorance or any combination of the three).

Instead, the route to success is guided by ‘actuals’ as they emerge. What is the nature of the data? What does it include? How can it be used? Where can it be used? What happens when it is combined? Data is like cooking the elephant: mix multiple ingredients and unexpected flavours can result. Mix various data sources and you can discover the unexpected.

While this may sound terribly theoretical, and even risky (after all, show us the executive who is willing to commit funds to a project with an uncertain path to value), this is how the best data warehousing successes come about.

Take East Health as an example: the company’s top brass recognised that it had both a data problem and a data opportunity. It set off on a path which would take years, but which has delivered unequivocal value. Senior business analyst Jody Janssen says: ‘We’ve realised we had no idea of what was in the systems simply because we had no way of getting it out.’ Only once the project was well underway, using iterative processes, was it possible to fully comprehend the data, and what could be done with it.

These days, iterative development that allows for making mistakes and rapidly correcting them is known as DevOps. Back when WhereScape RED was first conceived, the approach didn’t even have a name; today, iterative development for data analytics is known as DataOps.

Lessons from The Lean Startup

Back in 2011, Eric Ries published The Lean Startup: How Today’s Entrepreneurs Use Continuous Innovation to Create Radically Successful Businesses. Quite a title, but the book resonates because it explains why getting value in front of customers as fast as possible is the best way to get them on board. It’s the same with a data warehousing project, particularly given the hangover from failed initiatives in days gone by. When value is rapidly demonstrated, the business gets keen for more.

Rather than focusing excessively on delivering perfection, an iterative project shifts the emphasis to showing what’s possible. That gets people fired up. It provides the best chance of making the greatest number of people happy. It accelerates engagement between developers and the business community (rather than the standoff that can arise in waterfall projects, where the data warehouse development team is seen as a gated community). Because the outcome isn’t set in stone, the very people who will use the data warehouse can influence its direction and functions. All along the way, refinements are made and iterative steps are taken (and sometimes walked back if they don’t work, at the cost of a few days rather than months and millions).

The other lesson is to focus on a pressing, important business problem, and to do so at the outset. Solve that one pressing issue and you have delivered value. And don’t be overly ambitious: there’s no point targeting machine learning before the infrastructure is in place. Failing fast in an iterative step is one thing; failing on a major objective is quite another.

Software and people

Tackling a data warehouse project iteratively depends to a large extent on having the right tool. Software like WhereScape RED encourages the method because it has frameworks and automation built in for the data plumbing that must be done before data can flow. RED works on the Pareto principle: it does 80 percent of the work, so the developer can focus on the 20 percent where the real difference is made (this is how RED makes developers 4 to 10 times more productive).

Approached the right way, data warehousing projects are transformative. They are how the new oil is extracted, refined and turned into fuel for your business.

Martin Norgrove is CTO at NOW Consulting, a data and analytics services company. With a BSc in Chemistry and Physics from The University of Auckland, Martin started his working life as a lab technician at Carter Holt Harvey before discovering his passion for all things data. He’s worked for numerous high-profile brands including Spark, Z Energy, ASB, Auckland Council and Lotto, and thinks the future is very bright.